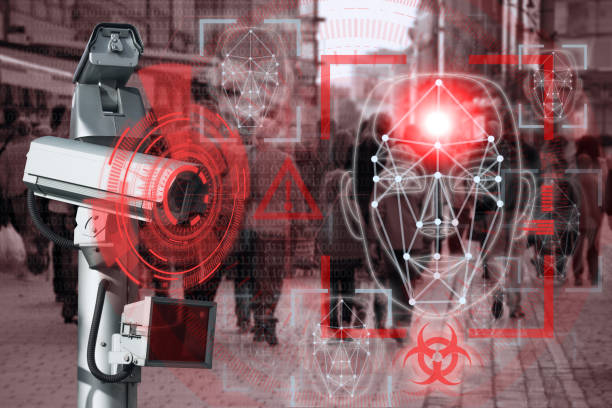

Businesses and platforms alike are finding it increasingly difficult to manage visual content. In 2023, an estimated 28.08 billion images were uploaded to the internet every day, far more than any team of human moderators could review by hand. AI image detectors reduce the resources required for image moderation.

Content moderation has never been more essential. AI-powered image detectors use machine learning to analyze and filter images automatically. This approach helps overcome the limitations of manual moderation while improving content safety.

This blog discusses how AI-powered image moderators can improve the accuracy of image detection and explores their benefits.

What is an AI Image Detector?

AI image detectors use computer vision and deep-learning models to analyze images. These models are trained on large datasets that contain both acceptable and harmful content, which teaches them to distinguish offensive from safe content.

These systems are built on neural networks, which are loosely inspired by how the brain processes information. During training, a model adjusts its internal parameters to reduce classification errors.
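
The idea of adjusting parameters to reduce classification errors can be illustrated with a minimal sketch. It assumes each image has already been reduced to two made-up numeric features and uses a single-layer logistic model trained by gradient descent, which is far simpler than the deep networks real detectors rely on. All names and values here are hypothetical.

```python
import math

def predict(x, weights, bias):
    """Return a score between 0 and 1; higher means more likely harmful."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(examples, labels, weights, bias):
    """Average classification error (cross-entropy) over the dataset."""
    total = 0.0
    for x, y in zip(examples, labels):
        p = min(max(predict(x, weights, bias), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(examples)

def train(examples, labels, epochs=200, lr=0.5):
    """Nudge the parameters after each example to shrink the error."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            error = predict(x, weights, bias) - y  # gradient of the loss
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Hypothetical feature vectors; label 1 = harmful, 0 = safe.
features = [[0.9, 0.8], [0.8, 0.9], [0.1, 0.2], [0.2, 0.1]]
labels = [1, 1, 0, 0]

loss_before = log_loss(features, labels, [0.0, 0.0], 0.0)
weights, bias = train(features, labels)
loss_after = log_loss(features, labels, weights, bias)
print(f"loss before training: {loss_before:.3f}, after: {loss_after:.3f}")
```

The loss printed after training is lower than the loss before it, which is what "reducing classification errors" means in practice.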

How does an AI detector function?

- The AI takes in the image and prepares it for analysis.

- It extracts features from the image.

- It classifies the image based on those features.

- It decides whether the image should be approved, flagged, or blocked.
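
The four steps above can be sketched as a small pipeline. This is an illustration under stated assumptions, not how any particular detector is built: the image is a toy grid of grayscale pixels, the two hand-picked features stand in for what a neural network would learn, and the weights and thresholds are hypothetical.

```python
import math
from typing import List, Sequence

Image = Sequence[Sequence[int]]  # toy grayscale image, pixel values 0-255

def preprocess(image: Image) -> List[List[float]]:
    """Step 1: scale raw pixel values into the 0-1 range."""
    return [[pixel / 255.0 for pixel in row] for row in image]

def extract_features(pixels: List[List[float]]) -> List[float]:
    """Step 2: reduce the image to a short feature vector.

    Mean brightness and contrast are stand-ins for learned features.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    contrast = max(flat) - min(flat)
    return [mean, contrast]

def classify(features: List[float], weights: List[float], bias: float) -> float:
    """Step 3: turn the features into a harm score between 0 and 1."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def decide(score: float, flag_at: float = 0.5, block_at: float = 0.9) -> str:
    """Step 4: map the score to approved, flagged, or blocked."""
    if score >= block_at:
        return "blocked"
    if score >= flag_at:
        return "flagged"
    return "approved"

def moderate(image: Image, weights: List[float], bias: float) -> str:
    """Run all four steps in order."""
    features = extract_features(preprocess(image))
    return decide(classify(features, weights, bias))

# Hypothetical model parameters and a 2x2 example image.
print(moderate([[0, 255], [128, 64]], weights=[1.0, 1.0], bias=-5.0))
```

Keeping each step in its own function makes it easy to swap the placeholder feature extractor or classifier for a trained model later.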

AI image detectors can evaluate images almost instantly, which makes them valuable for platforms that want to scale quickly while upholding stringent content moderation standards.

Why Are AI Systems Necessary for Image Moderation?

To answer the question “why AI for image moderation?”, it helps to understand the difficulties of traditional image moderation. Manual moderation poses many hurdles: content shifts constantly, and different moderators can respond to the same image in inconsistent ways.

AI-powered systems operate at scale, around the clock. They help keep harmful content from slipping through, and they speed up moderation because they can process thousands of images within seconds. They also help minimize human subjectivity.

Platforms that use AI content moderation can achieve lower error rates and more reliable moderation processes, allowing businesses to expand while still providing a safe environment for users.

Are AI Image Detectors Capable of Recognizing Harmful Content Accurately?

AI image detectors can identify harmful content accurately, as demonstrated in numerous studies and applications. They can flag violent or inappropriate images without human intervention.

AI moderators must strike a balance between false positives and false negatives. A false positive occurs when safe content is flagged; a false negative occurs when harmful content goes undetected. Tuning the system to catch more harmful content tends to flag more safe content as well, and vice versa.
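
A small example makes the balance concrete. The scores and labels below are invented for illustration; the sketch simply counts both kinds of error at a few hypothetical decision thresholds.

```python
def count_errors(scores, labels, threshold):
    """Return (false_positives, false_negatives) for a given threshold.

    An image is flagged when its score is at or above the threshold.
    Label 1 means the image is actually harmful, 0 means it is safe.
    """
    false_positives = sum(
        1 for s, y in zip(scores, labels) if s >= threshold and y == 0
    )
    false_negatives = sum(
        1 for s, y in zip(scores, labels) if s < threshold and y == 1
    )
    return false_positives, false_negatives

# Made-up model scores and ground-truth labels for six images.
scores = [0.95, 0.80, 0.65, 0.55, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0]

for threshold in (0.3, 0.5, 0.7):
    fp, fn = count_errors(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

On this toy data, the lowest threshold flags two safe images but misses nothing harmful, while the highest flags no safe images but lets one harmful image through. Where to set the threshold depends on which error is more costly for the platform.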

These errors can also be used to refine the model: through continuous updates and feedback loops, the system reduces its error rate over time.

AI-powered image moderation has been deployed by numerous platforms and results have improved significantly. AI is capable of quickly sorting through large amounts of data, making it indispensable in today’s digital environment.

What Are the Advantages of AI-Powered Image Moderation?

AI-driven image moderation improves operational efficiency and user satisfaction. AI image detectors offer several advantages:

Scalability and Automation

AI systems can easily handle an increase in visual content. AI image detectors don’t need breaks or sleep, making them well suited to 24/7 monitoring. This scalability lets platforms expand without compromising quality or speed.

Savings and Efficiency

Automation helps businesses lower moderation costs by reducing the number of moderators they need. AI systems handle the bulk of content review, so businesses can direct their resources to other areas, and they perform repetitive tasks faster than humans can.

Improved Accuracy & Consistency

Human moderators often struggle with consistency. AI systems apply the same criteria across all content, reducing subjective decisions and individual bias. This consistency helps ensure all users are treated fairly on safety and compliance issues.

How Can AI Image Detectors Increase Content Safety?

AI image detectors are crucial in maintaining standards for online communities and keeping them safe. They filter out content that violates guidelines. AI image detectors also help platforms meet legal requirements.

How AI-Powered Image Moderation Can Enhance Content Security:

- Real-Time Monitoring and Filtering

AI systems analyze each image and make instant decisions based on guidelines. This proactive filtering improves online security by reducing the harmful content users see.

- Supporting a Safer Community

AI-powered moderation helps users feel safe and respected by removing harmful images. Users enjoy a better experience when platforms remove offensive content, which can improve platform engagement and loyalty.

- Assisting Human Moderators

AI systems handle the majority of content review but also assist human moderators by flagging borderline cases for further evaluation. This collaboration reduces human workload while improving content safety.

- Maintaining Compliance

Many countries impose stringent laws regarding what content can be posted online. AI image detectors help platforms comply with these laws by quickly detecting violations, which helps maintain their reputation as responsible digital spaces.
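
The hand-off between automated decisions and human moderators described above can be sketched as follows. The thresholds, file names, and the choice to review the most ambiguous images first are all assumptions made for illustration.

```python
def route(scores, approve_below=0.2, block_above=0.9):
    """Split images into auto-approved, auto-blocked, and human review.

    `scores` maps an image ID to a harm score between 0 and 1.
    Clear-cut cases are handled automatically; the rest are queued.
    """
    auto_approved, auto_blocked, review_queue = [], [], []
    for image_id, score in scores.items():
        if score < approve_below:
            auto_approved.append(image_id)
        elif score > block_above:
            auto_blocked.append(image_id)
        else:
            review_queue.append(image_id)
    # Assumed priority rule: scores closest to 0.5 are reviewed first.
    review_queue.sort(key=lambda image_id: abs(scores[image_id] - 0.5))
    return auto_approved, auto_blocked, review_queue

# Hypothetical batch of scored uploads.
batch = {"a.jpg": 0.05, "b.jpg": 0.97, "c.jpg": 0.55, "d.jpg": 0.10, "e.jpg": 0.30}
approved, blocked, queue = route(batch)
print(f"approved: {approved}, blocked: {blocked}, needs review: {queue}")
```

In this batch only two of the five images reach a human, which is the workload reduction the collaboration is meant to deliver.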

Conclusion

As visual content continues to increase, platforms must adapt in order to ensure safety, efficiency, and compliance. AI-powered image detectors offer an essential solution by automating and scaling moderation: they analyze content in real time, reduce human workload, and deliver high accuracy. This is changing how businesses manage digital content. By adopting this technology, platforms can create safer online environments while cutting operational costs and remaining compliant with global regulations.